Sensors are key elements of any instrument. Hence, they deserve special attention in the preliminary design phase of the European Solar Telescope (EST), as we show below.

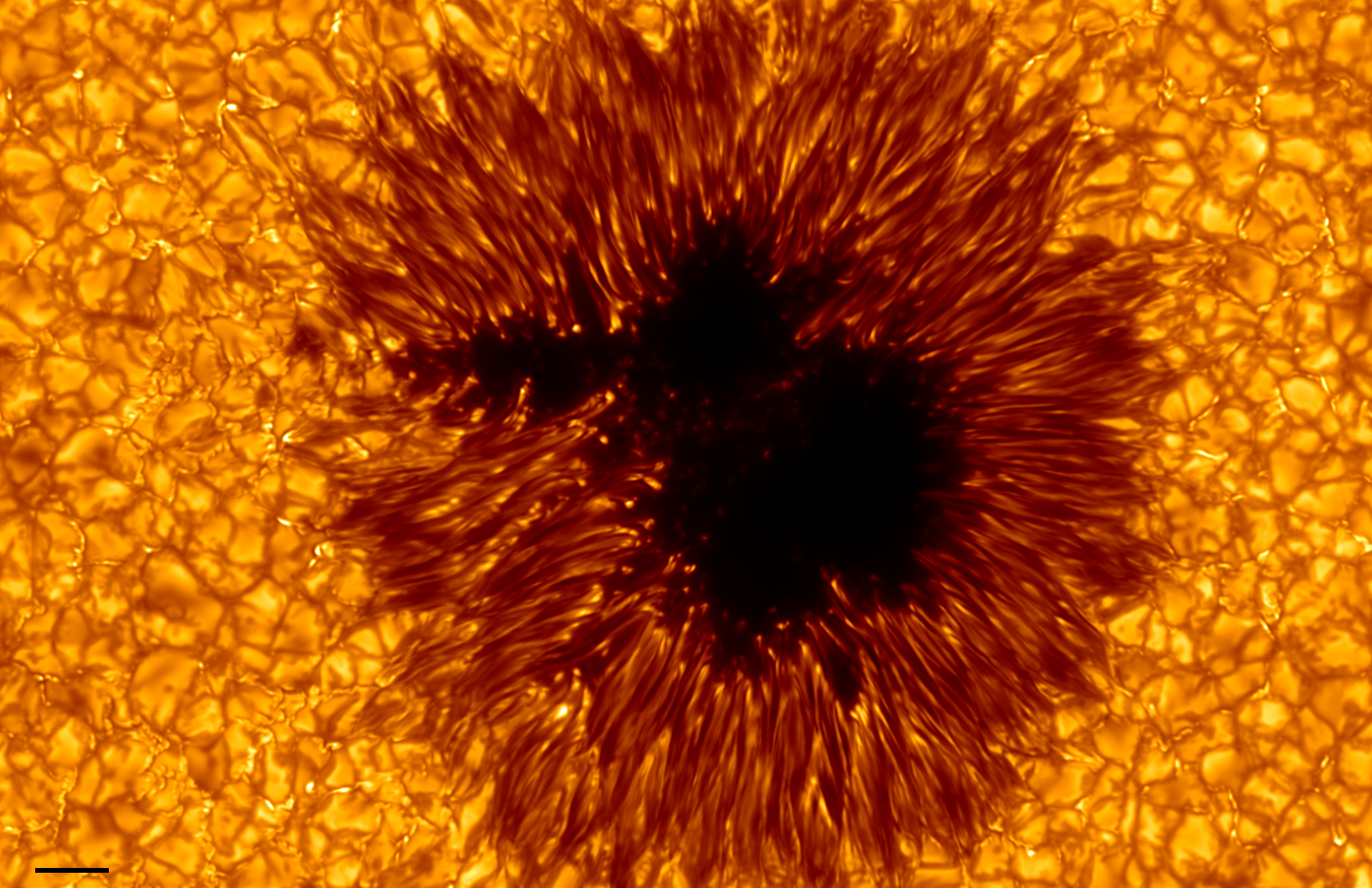

Figure 1. Example of a sunspot. Sunspots are the most prominent magnetic structures on the Sun and are responsible for eruptive events such as flares and coronal mass ejections. Imaging instruments on EST aim to observe them in one go using large-format sensors with a high pixel count. Image credit: L. Rouppe van der Voort (ITA), S. Jafarzadeh (ITA), J. de la Cruz Rodríguez (SU).

EST will have a set of instruments that will observe the Sun simultaneously in complementary spectral regions. This unique approach aims to provide researchers with unprecedented height resolution to understand solar phenomena and answer long-standing questions in solar physics. Among the multiple systems in a given instrument, there is one that is considered especially critical, namely the sensors. They are the last item on the optical path and are responsible for converting the incoming light (photons) into information that the solar astronomer can analyse. Hence, they deserve special attention, and the EST team, together with experts in the field, has been working on defining the general sensor requirements to fulfil the EST science goals.

Our aim here is to cover some of the sensor elements that are crucial to our research, leaving out other items that, although essential, are too specific for this article. Moreover, to illustrate the concepts, we have tried to compare most of the requirements with situations in our daily lives in which we use a similar type of sensor, namely when we use our smartphones.

The advent of smartphones with excellent photography capabilities allows everyone to capture important moments with very high quality and less effort than in the past. There are multiple reasons for that, one of them being the rapid evolution of sensor technology. Fortunately, this also happens in the field of astrophysics. We have experienced a massive leap in several areas, such as the sensor pixel count (the equivalent of the number of megapixels in a smartphone’s camera), the frame rate (some of you probably enjoy taking slow-motion videos), and the dynamic range (similar to switching on HDR when taking a nice picture of a sunset), among others. These parameters keep improving year after year. With that in mind, we decided to adopt the following strategy. First, we reviewed the EST Science Requirements Document (available on arXiv) to define the ideal sensor that would meet those requirements. The results of the analysis are summarised below. In a second step, we will interact with companies to check whether that sensor already exists or whether it is on their roadmap, so that we can have access to it a few years from now.

Before starting, let’s meet the main limiting factor when performing observations from the ground: the Earth’s atmosphere. It changes rapidly in different ways at various heights (e.g., the wind patterns at the telescope level and 10 km above the telescope are usually completely different). We have adaptive optics systems that measure the atmosphere’s changes and correct for them almost in real time, compensating for the atmospheric disturbance. However, the correction, even in the ideal case, still leaves residual effects. Those effects can result in a blurred image.

Experience with ground-based telescopes shows that a fast frame rate can attenuate this effect, as you are almost “freezing” the atmosphere during the observation time. Thus, one of the key requirements for EST sensors is an extremely high frame rate. Let’s take an everyday example of this situation. Imagine that you are taking a picture with your smartphone. The shake of your hands (similar to the atmospheric disturbance) can blur the image. So, smartphones nowadays have image stabilisation mechanisms (like our adaptive optics system) that reduce, to some extent, the impact of your shaking hands. However, that needs to be coupled with a high frame rate to effectively freeze the motion of your hands and make the image as sharp as possible.

Therefore, we should aim at the highest frame rate possible. However, we need to bear in mind additional specifications that will affect the frame rate considerably. The first one is the pixel count. Current telescopes use sensors with a pixel count between 2000 (2k) and 4000 (4k) pixels on a side. If we consider an imaging instrument, each pixel corresponds to a spatial region of the Sun. In other words, the more pixels we have in the sensor, the larger the area we can cover on the Sun. This is important because we can move from imaging just a tiny part of a sunspot (see Figure 1) to observing it entirely at once. The latter is critical if we aim to understand what triggered, for instance, an eruptive event like a flare or a coronal mass ejection.

However, if you record a slow-motion movie, you will see that the frame rate is much higher, but the resolution has also dropped. Sensors used in astrophysics show the same behaviour: the larger the pixel count (e.g., a higher smartphone slow-motion resolution), the lower the frame rate. This is the first trade-off we have found. The EST sensors should reach a frame rate of 50-100 frames per second (fps) to freeze the atmosphere, but the pixel count should be at least 4k or even 6k pixels on a side to cover a wide enough solar region, especially in the blue part of the spectrum. Finding a balance between the two requirements seems doable, but now we face a new dichotomy. Sensor (or camera) manufacturers offer different frame rates depending on the type of shutter used to read the sensor’s pixels. In particular, the two leading technologies are global and rolling shutter. First, let’s try to understand what they are and the implications they could have on EST observations.
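One way to get a feeling for this trade-off is to estimate the raw data rate a sensor has to deliver, since readout bandwidth is commonly one of the factors that limit the frame rate. The following is a minimal sketch, not an EST specification: it assumes that "4k" and "6k" mean 4096 and 6144 pixels on a side, that the sensor is square, and that each pixel is stored in 2 bytes. The function name `data_rate_gb_per_s` is hypothetical.

```python
def data_rate_gb_per_s(pixels_per_side: int, fps: float, bytes_per_pixel: int = 2) -> float:
    """Raw data rate in gigabytes per second (1 GB = 1e9 bytes) for a square sensor.

    bytes_per_pixel=2 is an assumption made for illustration only.
    """
    bytes_per_frame = pixels_per_side ** 2 * bytes_per_pixel
    return bytes_per_frame * fps / 1e9


if __name__ == "__main__":
    # Assumed sensor sizes (4096 and 6144 pixels on a side) and the
    # 50-100 fps range quoted in the text.
    for side in (4096, 6144):
        for fps in (50, 100):
            rate = data_rate_gb_per_s(side, fps)
            print(f"{side} x {side} px at {fps} fps: {rate:.2f} GB/s")
```

Under these assumptions, doubling the frame rate or moving from a 4k to a 6k sensor both increase the data volume that must be read out and stored every second, which is why the two requirements pull against each other.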

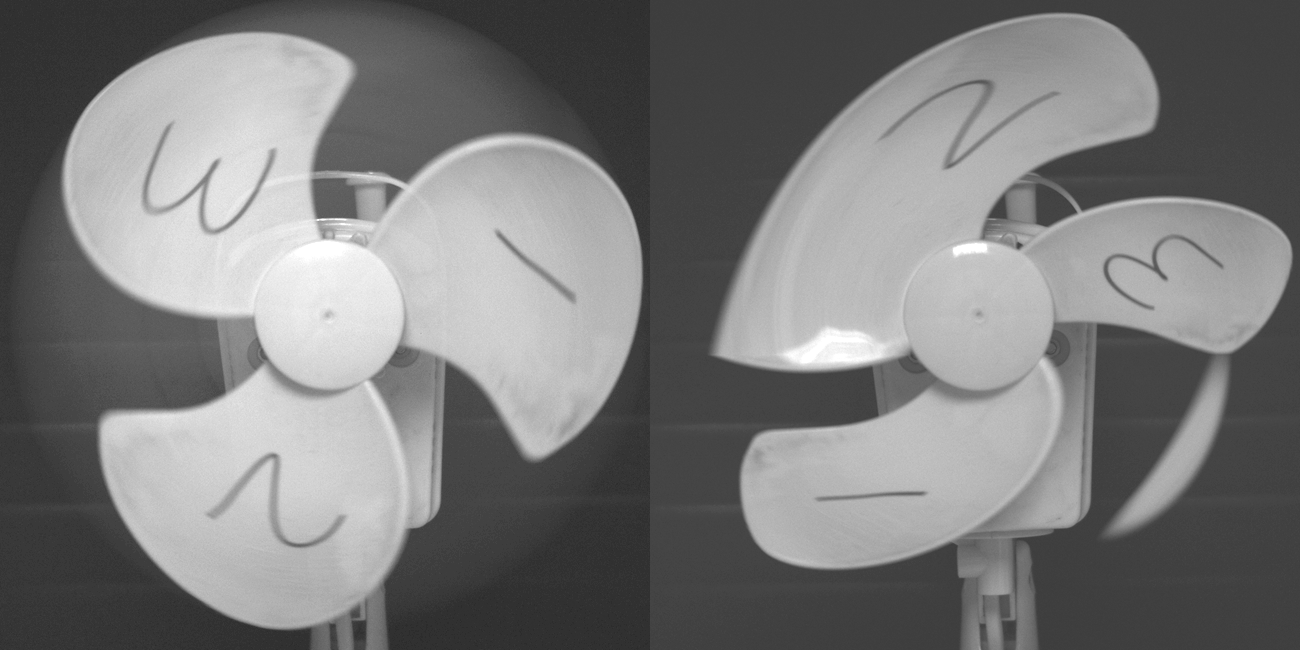

Figure 2. Example of the distortion produced by a rolling shutter when the reading speed is on the order of the speed of the photographed object. Left: Global shutter. Right: Rolling shutter. Image credit: Andor and Oxford Instruments Company.

Rolling and global shutter refer to how the pixels are read on the sensor. In the case of a global shutter, all the pixels are read strictly simultaneously. In the case of a rolling shutter, the pixels are read row by row, for instance, from the centre of the sensor towards the edges. Thus, the pixels at the edge of the sensor are read with a delay with respect to those in the central part. This is, in general, a problem when you have moving elements. An example is shown in Figure 2: the distortion of the fan blades in the right panel, taken with a rolling shutter, is apparent. For this reason, a global shutter was in the past a requirement for optimal solar observations.

However, recent developments in rolling shutter technology allow speeds of around 80-100 fps even with 4k sensors (the minimum requirement for EST). The same sensor can sometimes work with a global shutter, but the speed of that mode is usually half or less of that obtained with a rolling shutter. So, this opens a new window of consideration for EST. The critical element is that the rolling shutter effect (or distortion) depends on the object you are observing. The faster the object moves, the worse the distortion (see the fan blades). However, as we said, new sensor technologies are offering much higher speeds for rolling shutter, so we will explore whether the EST observations would be affected by any rolling shutter effects when using modern cameras before making a final decision. The primary test we plan to do is to check whether image restoration techniques can be successfully applied to rolling shutter data. We will also evaluate whether the required accuracy of the measurement of the polarisation signals is maintained.
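The dependence of the distortion on the speed of the object can be sketched with a very simple model: the apparent shift of a feature equals the distance it travels between the reading of the first and the last rows. The code below is only an illustration under stated assumptions. It assumes, as a worst case, that reading all the rows takes the full frame period (1/fps), and the speed and pixel scale in the example are made-up illustrative values, not EST figures. It does not model residual atmospheric image motion, image restoration, or polarimetric accuracy, which are the subject of the planned tests.

```python
def readout_time_s(fps: float) -> float:
    """Worst-case assumption: reading every row takes the whole frame period."""
    return 1.0 / fps


def rolling_shutter_shift_px(speed_km_s: float, pixel_scale_km: float,
                             readout_s: float) -> float:
    """Apparent shift, in pixels, of a feature moving at speed_km_s between
    the first and the last row read by a rolling shutter."""
    return speed_km_s * readout_s / pixel_scale_km


if __name__ == "__main__":
    speed_km_s = 10.0      # illustrative speed of a solar feature (assumption)
    pixel_scale_km = 20.0  # illustrative size of one pixel on the Sun (assumption)
    for fps in (10, 50, 100):
        shift = rolling_shutter_shift_px(speed_km_s, pixel_scale_km,
                                         readout_time_s(fps))
        print(f"{fps:>3} fps: apparent shift of {shift:.4f} px")
```

In this toy model the shift scales linearly with the speed of the feature and inversely with the frame rate, which matches the qualitative argument above: faster readout leaves less time for the scene to change while the rows are being read.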

Finally, the last simile we want to present concerns the demanding observing conditions we face when performing spectropolarimetry. This technique aims to detect traces of magnetic fields in the solar atmosphere. Those traces are generally subtle and produce perturbations in the incoming light of the order of 1/1000 of the total radiation. So, no matter how bright you think the Sun is, solar observations are always photon starved. The reason, as said before, is that we need to go as fast as possible to avoid atmospheric disturbance, and we are interested in intensity fluctuations that are a tiny fraction of the total incoming radiation. Think about this situation: you believe that your smartphone’s camera is excellent, but, at the same time, you know that the pictures you take when having dinner in a fancy restaurant with dimmed light are going to be less impressive than you expect, with a grainy pattern and maybe a bit of blur due to the shake of your hand and the longer exposure time required by the poor lighting conditions.
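The phrase "photon starved" can be made concrete with photon (Poisson) statistics, where the relative noise of N detected photons is 1/sqrt(N). The sketch below estimates how many photons per pixel are needed for the noise to drop to a given fraction of the total intensity, and how many frames would have to be accumulated to collect them. The full-well capacity of 50,000 electrons and the one-electron-per-photon conversion are assumptions chosen for illustration, not values taken from the EST requirements; the function names are hypothetical.

```python
import math


def photons_needed(signal_fraction: float, snr: float = 1.0) -> float:
    """Photons per pixel so that the relative Poisson noise, 1/sqrt(N),
    equals signal_fraction / snr."""
    return (snr / signal_fraction) ** 2


def frames_needed(signal_fraction: float, full_well_e: float,
                  snr: float = 1.0) -> int:
    """Frames to accumulate if each frame collects at most full_well_e
    electrons per pixel (assuming one electron per detected photon)."""
    return math.ceil(photons_needed(signal_fraction, snr) / full_well_e)


if __name__ == "__main__":
    fraction = 1e-3         # signal of the order of 1/1000, as in the text
    full_well_e = 50_000.0  # hypothetical full-well capacity (assumption)
    print(f"Photons per pixel: {photons_needed(fraction):.0f}")
    print(f"Frames to accumulate: {frames_needed(fraction, full_well_e)}")
```

Even in this idealised case, reaching a noise level of 1/1000 requires about a million photons per pixel, which cannot be collected in one short exposure by the assumed pixel. This is why fast sensors and the accumulation of many frames go hand in hand.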

On top of that, the sensors we are looking for should generate little noise when they are read out, and their pixels should be able to capture both the dark pattern of the shadows in the restaurant and the bright, radiant smile of your dinner partner.
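These two properties are commonly quantified through the read noise and the dynamic range, the latter being the ratio between the largest signal a pixel can hold (its full-well capacity) and the read noise. The following sketch uses hypothetical numbers (a full well of 50,000 electrons and a read noise of 2 electrons), chosen only for illustration and not taken from Table 1. It also shows the usual way of combining photon noise and read noise in quadrature.

```python
import math


def dynamic_range(full_well_e: float, read_noise_e: float) -> float:
    """Ratio between the brightest and the faintest measurable signal."""
    return full_well_e / read_noise_e


def dynamic_range_bits(full_well_e: float, read_noise_e: float) -> float:
    """The same ratio expressed in bits (base-2 logarithm)."""
    return math.log2(dynamic_range(full_well_e, read_noise_e))


def total_noise_e(signal_e: float, read_noise_e: float) -> float:
    """Photon (shot) noise, sqrt(signal), and read noise added in quadrature."""
    return math.sqrt(signal_e + read_noise_e ** 2)


if __name__ == "__main__":
    full_well_e = 50_000.0  # hypothetical value (assumption)
    read_noise_e = 2.0      # hypothetical value (assumption)
    print(f"Dynamic range: {dynamic_range(full_well_e, read_noise_e):.0f}:1")
    print(f"In bits: {dynamic_range_bits(full_well_e, read_noise_e):.1f}")
    # Read noise matters most in the shadows, where few photons arrive.
    for signal_e in (10.0, 10_000.0):
        noise = total_noise_e(signal_e, read_noise_e)
        print(f"Signal {signal_e:>7.0f} e-: total noise {noise:.1f} e-")
```

In the dark regions the read noise is a sizeable part of the total noise, whereas in the bright regions the photon noise dominates, which is why both a low read noise and a deep full well are desirable.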

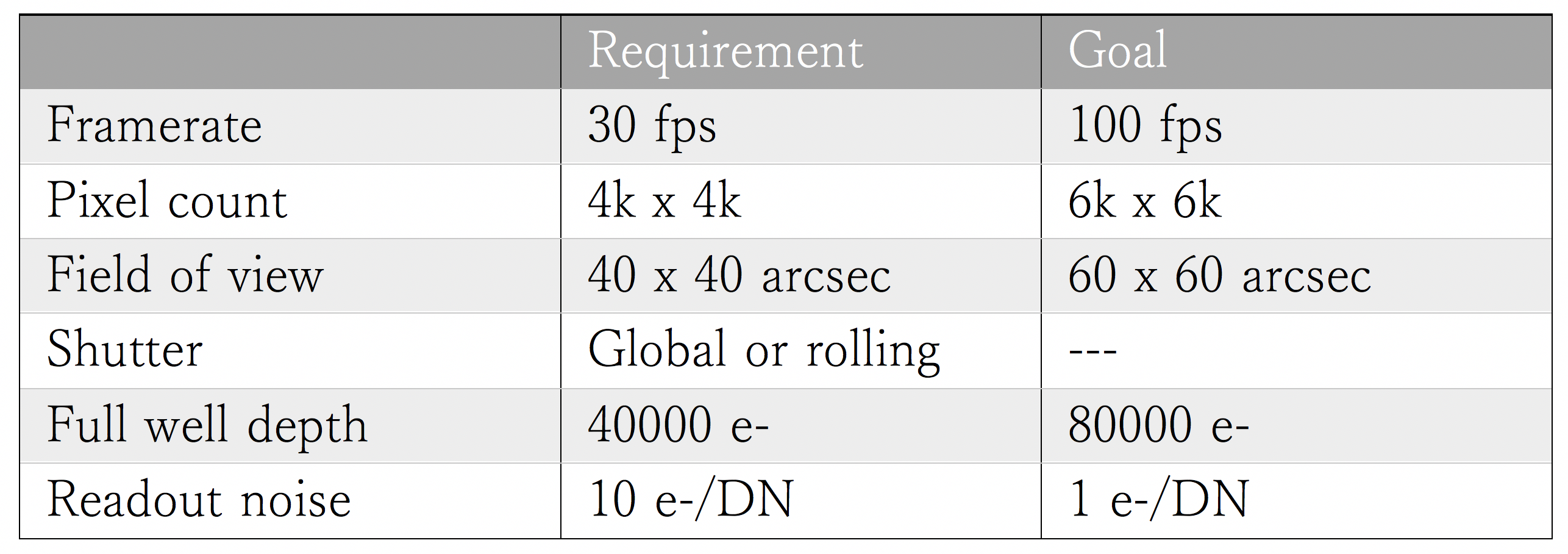

Table 1. General requirements for the sensors of the EST imaging instruments.

At this moment, we have defined general sensor requirements that are the ideal ones for fulfilling the EST science goals (see Table 1). The next step is to interact with manufacturers and the instrument developers to check what can be achieved and what is out of reach, but most importantly, to decide where we accept trade-offs to maximise the capabilities of the EST instrument suite. The ultimate goal is to get access to a “universal” sensor that fulfils the requirements of the EST instruments. In particular, we wish to have the same sensor in all the imaging instruments to seamlessly cover the solar spectrum from 380 to 900 nm. The number of sensors for the imaging instruments goes up to 12, so we expect to reduce research and development costs considerably. As the sensors are identical, the budget needed to purchase spare units for maintenance activities is also reduced. Moreover, having only one sensor type makes synchronisation and operation easier. The data reduction software will also be identical, simplifying the software development and future updates. In other words, having a “universal” sensor for all the imaging instruments is a strategic decision that reduces costs and simplifies the operation of the EST instrument suite.

Acknowledgements. We thank Andor and Oxford Instruments Company (especially Dr. C. Coates) for providing the example used in Figure 2, and for the extensive comparison between global and rolling shutter presented on their official website.