Adaptive optics compensates for atmospheric turbulence by deforming one or more mirrors to improve image quality. To do this, the system must first measure how much distortion the incoming light carries. There are several ways to make that measurement; the most novel is to use artificial neural networks.

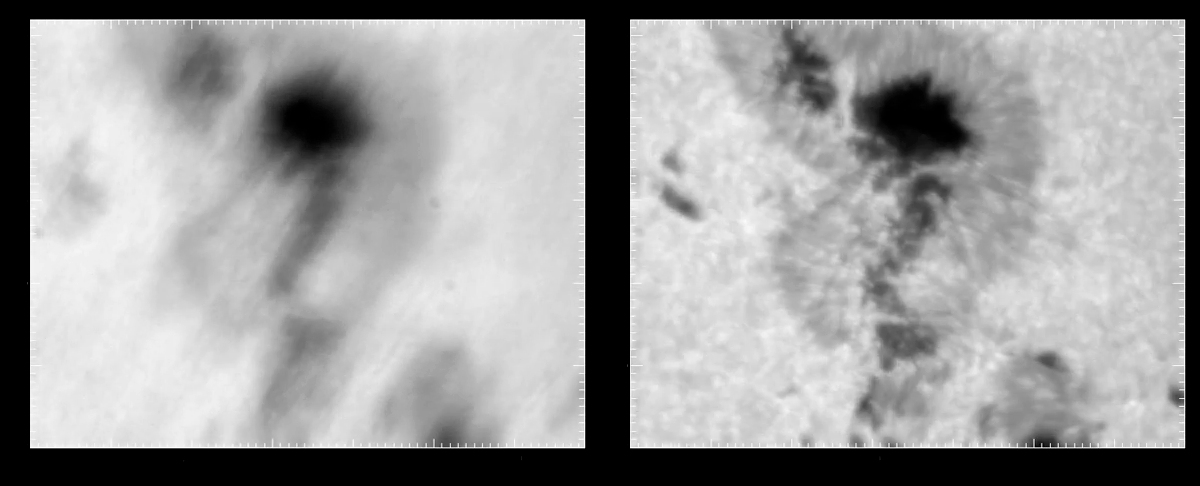

Active region observed at the German Vacuum Tower Telescope in Tenerife, Spain. Left: the image before correction. Right: the image after adaptive optics is applied. For the system to work, it must be able to measure the wavefront aberrations in real time and use them to calculate the mirror deformation needed to correct the image. The EST project is testing artificial neural networks (ANNs) to measure these wavefront errors. / Image: Bruno Sánchez-Andrade Nuño (http://arxiv.org/abs/0902.3174)

When sunlight enters the Earth’s atmosphere, it is distorted by atmospheric turbulence. This changes the shape of the wavefront, so the telescope captures blurred and shifted images.

To see the image properly, the wavefront errors need to be corrected. In a telescope, this is the job of adaptive optics, which compensates for the optical aberrations by deforming a mirror (or several, as will be the case for EST).

The first step is to measure those wavefront distortions… in real time. The multi-conjugate adaptive optics (MCAO) testbed will compare two methods for doing this. The first is based on standard reconstruction algorithms applied to Shack-Hartmann wavefront sensors. The second uses the same sensors combined with artificial neural networks (ANNs).
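A Shack-Hartmann sensor places an array of small lenslets in front of a camera, so each lenslet (subaperture) forms its own small image. A local tilt of the wavefront moves that image on the detector: to first order, the shift equals the lenslet focal length times the mean wavefront slope over the subaperture. The Python sketch below illustrates the idea with a simple centre-of-gravity centroid on a point-like spot. The function names, pixel size and focal length are hypothetical values chosen for illustration, not parameters of the EST testbed. Solar sensors usually look at extended granulation rather than point-like spots, so real solar systems typically estimate the shift by cross-correlating subaperture images instead of centroiding.

```python
def centroid(subimage):
    """Centre of gravity (x, y), in pixels, of a 2D list of intensities."""
    total = sum(sum(row) for row in subimage)
    if total == 0:
        raise ValueError("subaperture image contains no signal")
    cx = sum(x * v for row in subimage for x, v in enumerate(row)) / total
    cy = sum(y * v for y, row in enumerate(subimage) for v in row) / total
    return cx, cy


def local_slope(subimage, reference, pixel_size_m, focal_length_m):
    """Mean wavefront slope (radians) in x and y over one subaperture.

    The spot shift on the detector (in metres) divided by the lenslet
    focal length gives the average tilt (small-angle approximation).
    """
    cx, cy = centroid(subimage)
    rx, ry = reference
    return ((cx - rx) * pixel_size_m / focal_length_m,
            (cy - ry) * pixel_size_m / focal_length_m)


if __name__ == "__main__":
    # Hypothetical 5x5 subaperture: spot one pixel right of the centre (2, 2).
    spot = [[0, 0, 0, 0, 0],
            [0, 0, 0, 1, 0],
            [0, 0, 1, 4, 1],
            [0, 0, 0, 1, 0],
            [0, 0, 0, 0, 0]]
    sx, sy = local_slope(spot, reference=(2.0, 2.0),
                         pixel_size_m=5e-6, focal_length_m=5e-3)
    print(f"slope x = {sx:.2e} rad, slope y = {sy:.2e} rad")
```

Repeating this for every subaperture yields a map of local slopes. The standard approach reconstructs the wavefront from that map algorithmically, while the ANN approach learns to estimate the turbulence directly from the sensor images.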

Training the network

“Neural networks are mathematical algorithms that emulate the way that humans learn,” states Javier de Cos, director of the Instituto Universitario de Ciencias y Tecnologías del Espacio de Asturias (Spain). This means that they learn by looking at examples and adjusting themselves. In this case, the examples show the effects that different known turbulence profiles produce on the solar images received by the telescope.

To create these examples, scientists use an “artificial” image of the Sun: a very high-resolution, computer-generated image that includes all the different phenomena likely to be observed with the telescope. They then deform that perfect image by passing it through a known turbulence profile, generating a distorted image. Given the original and perturbed images, the neural network is tasked with working out the turbulence profile.
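The data-generation step can be sketched in a few lines of Python. As a deliberate simplification, the “turbulence profile” here is reduced to a single global image shift (a crude stand-in for tip/tilt), whereas a real simulation would apply a full turbulence profile. `shift_image` and `make_training_set` are hypothetical helpers, and the tiny reference pattern plays the role of the high-resolution artificial Sun.

```python
import random


def shift_image(image, dx, dy):
    """Circularly shift a 2D list of pixels by (dx, dy): a crude tip/tilt stand-in."""
    h, w = len(image), len(image[0])
    return [[image[(y - dy) % h][(x - dx) % w] for x in range(w)]
            for y in range(h)]


def make_training_set(reference, n_examples, max_shift, seed=0):
    """Return (distorted_image, label) pairs whose label is the known shift."""
    rng = random.Random(seed)
    examples = []
    for _ in range(n_examples):
        dx = rng.randint(-max_shift, max_shift)
        dy = rng.randint(-max_shift, max_shift)
        examples.append((shift_image(reference, dx, dy), (dx, dy)))
    return examples


if __name__ == "__main__":
    # Toy "artificial Sun": a flat background with one bright feature.
    reference = [[0.1] * 8 for _ in range(8)]
    reference[3][4] = 1.0
    training = make_training_set(reference, n_examples=1000, max_shift=2)
    distorted, label = training[0]
    print("known shift for the first example:", label)
```

Pairing each distorted image with the parameters that produced it is what turns the simulation into supervised training data: the network sees the distorted input and is scored on how close its estimate comes to the known label.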

“We use millions of examples, so the network can get a grip on the process. Once trained, we validate it: we feed it new images deformed by randomly generated turbulence profiles,” explains de Cos, who oversees the development of the neural networks for EST. “If the network gets them right, it has learnt. If not, it might have overlearnt (meaning that it might have memorised inputs instead of finding connections) and needs retraining,” he concludes.
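The validation logic de Cos describes can be illustrated with a toy comparison in Python. The sketch contrasts a model that learns the underlying relation (an ordinary least-squares line) with one that merely memorises its training inputs, and flags a model as overfitted when its error on fresh examples is far above its training error. The linear relation, the `ratio` and `tol` thresholds and all function names are hypothetical illustrations, not the EST validation procedure.

```python
import random


def mse(model, examples):
    """Mean squared error of a model over (input, target) pairs."""
    return sum((model(x) - y) ** 2 for x, y in examples) / len(examples)


def looks_overfitted(model, train, validation, ratio=2.0, tol=1e-9):
    """True if the error on unseen data is far above the training error.

    ratio and tol are illustrative thresholds, not values used for EST.
    """
    return mse(model, validation) > ratio * mse(model, train) + tol


def fit_line(examples):
    """Least-squares fit of y = a*x + b: captures the underlying relation."""
    n = len(examples)
    mx = sum(x for x, _ in examples) / n
    my = sum(y for _, y in examples) / n
    sxx = sum((x - mx) ** 2 for x, _ in examples)
    sxy = sum((x - mx) * (y - my) for x, y in examples)
    a = sxy / sxx
    b = my - a * mx
    return lambda x: a * x + b


def memorise(examples):
    """A 'model' that can only recall the exact inputs it was shown."""
    table = dict(examples)
    return lambda x: table.get(x, 0.0)


def relation(x):
    """Hypothetical true link between a measurement and its target value."""
    return 2.0 * x + 1.0


def make_examples(rng, n):
    xs = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    return [(x, relation(x)) for x in xs]


if __name__ == "__main__":
    rng = random.Random(1)
    train, validation = make_examples(rng, 100), make_examples(rng, 50)
    print(looks_overfitted(fit_line(train), train, validation))  # False
    print(looks_overfitted(memorise(train), train, validation))  # True
```

The memoriser scores perfectly on the data it has seen yet fails on new inputs, which is exactly the symptom the validation step is meant to catch.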

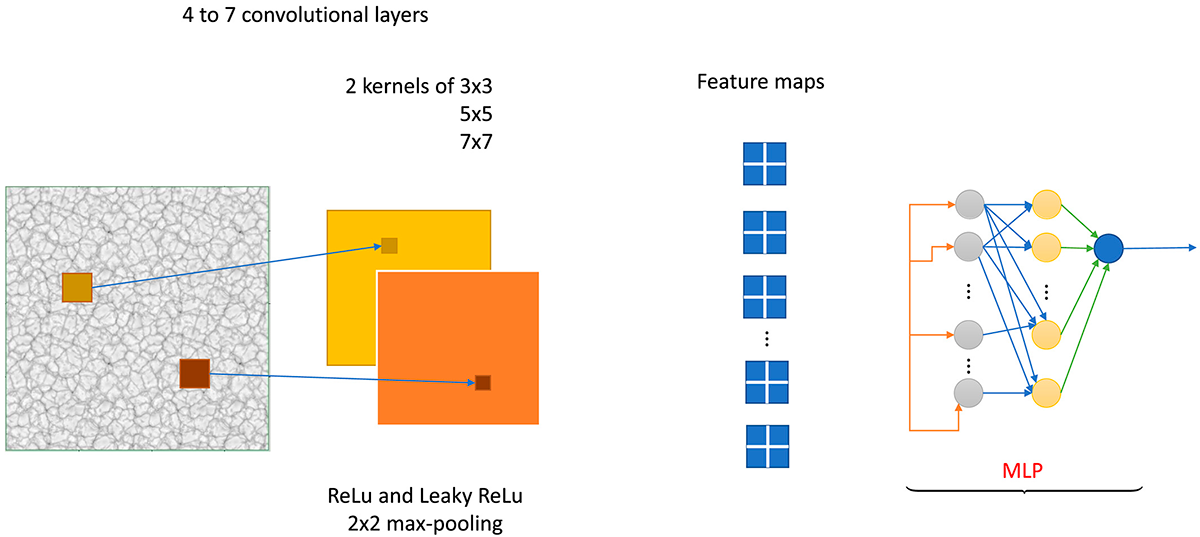

Flowchart of the process. The images taken by the Shack-Hartmann sensor are used as input images for the reconstructor based on convolutional neural networks. The images of all of the subapertures of the Shack-Hartmann sensor are processed together. The pixel output of the convolutional layers forms the input layer of the multilayer perceptron (MLP) on top. / Image: Sánchez Lasheras et al. (2020; see reference at the bottom of the page)

A novel approach

The EST neural network is being trained to determine the turbulence parameters. The system then uses these parameters to calculate how much the MCAO mirrors must be deformed to compensate for the wavefront aberrations. (In principle, the network could be trained to drive the mirrors directly; however, the turbulence profile is not the only input used to calculate the mirror deformation.)

Once trained, the network is a black box: there is no way of knowing exactly what it is doing internally (the network keeps adjusting itself, much as our brains do). Although this can raise some concerns, it also means that, once in use, it keeps learning and improving.

“Using artificial neural networks for multi-conjugate adaptive optics is a very novel approach, and this is one of the first attempts at using them in solar telescopes,” points out de Cos. “Simulations are promising, but we’re now entering the most delicate part: checking them in the EST MCAO testbed.”

Artificial neural networks: mimicking the human brain

Neural networks are usually used to find out how several known parameters combine to produce a certain result. This means that if we know that an image Z is the result of image X being deformed by a turbulence profile Y, the network can learn how X and Z are related and therefore calculate Y. We just need to train it with enough examples.

There are different types of neural networks. The simplest are single-layer perceptrons, which, given certain inputs, find the relative weight that each input has for a certain output. In multilayer networks, the results from one layer are processed by the next one and so on, allowing for more complex operations.
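The difference between the two kinds of network can be sketched in Python. The first function trains a single linear neuron with the delta (least-mean-squares) rule, a close relative of Rosenblatt's perceptron learning rule, so that it recovers one weight per input; the second chains fully connected layers, each feeding the next. The example relation, learning rate and epoch count are arbitrary illustrative choices.

```python
import random


def train_single_layer(examples, n_inputs, lr=0.1, epochs=200, seed=0):
    """Learn one weight per input (plus a bias) with the delta rule."""
    rng = random.Random(seed)
    w, b = [0.0] * n_inputs, 0.0
    data = list(examples)
    for _ in range(epochs):
        rng.shuffle(data)
        for x, target in data:
            err = target - (sum(wi * xi for wi, xi in zip(w, x)) + b)
            # Nudge each weight in proportion to its input's share of the error.
            w = [wi + lr * err * xi for wi, xi in zip(w, x)]
            b += lr * err
    return w, b


def dense(weights, biases, v):
    """Fully connected layer: each output is a weighted sum of all inputs."""
    return [sum(w * x for w, x in zip(row, v)) + b
            for row, b in zip(weights, biases)]


def multilayer_forward(layers, v):
    """Pass v through (weights, biases) layers; hidden layers apply ReLU."""
    for i, (w, b) in enumerate(layers):
        v = dense(w, b, v)
        if i < len(layers) - 1:
            v = [max(0.0, t) for t in v]
    return v


if __name__ == "__main__":
    rng = random.Random(42)
    true_w, true_b = [0.7, -0.2, 0.5], 0.1  # hypothetical relation to recover
    samples = []
    for _ in range(100):
        x = [rng.uniform(-1, 1) for _ in range(3)]
        samples.append((x, sum(w * xi for w, xi in zip(true_w, x)) + true_b))
    w, b = train_single_layer(samples, n_inputs=3)
    print([round(wi, 3) for wi in w], round(b, 3))  # close to [0.7, -0.2, 0.5] 0.1
```

The ReLU between layers matters: without a non-linearity, stacked layers collapse into a single weighted sum and gain nothing over the single-layer case.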

The approach being tested for EST uses a convolutional neural network with several layers (a specialised multilayer network designed to handle well-structured inputs with strong spatial dependencies, such as images), topped with a multilayer perceptron. “The wavefront sensor is just a camera that produces an image, and the convolutional network is able to pick out its most relevant aspects, ignoring the rest. This means that the final multilayer perceptron only needs to process the distinctive aspects produced by those specific turbulence conditions, which speeds up the process,” outlines Javier de Cos.
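A convolutional front end topped by a multilayer perceptron can be sketched in plain Python. The convolution below is the “valid” cross-correlation that deep-learning libraries conventionally call convolution: each kernel slides over the sensor image and produces a feature map, the maps are flattened, and a small MLP turns them into output values. The image size, number of kernels, layer widths and the three outputs (standing in for turbulence parameters) are hypothetical, and the weights are random and untrained; the actual reconstructor is a trained network whose architecture is described in Sánchez Lasheras et al. (2020).

```python
import random


def conv2d(image, kernel):
    """'Valid' 2D cross-correlation, as computed by CNN convolutional layers."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [[sum(kernel[i][j] * image[y + i][x + j]
                 for i in range(kh) for j in range(kw))
             for x in range(out_w)]
            for y in range(out_h)]


def relu2d(fmap):
    return [[max(0.0, v) for v in row] for row in fmap]


def flatten(fmaps):
    return [v for fmap in fmaps for row in fmap for v in row]


def dense(weights, biases, v):
    """Fully connected layer: each output is a weighted sum of all inputs."""
    return [sum(w * x for w, x in zip(row, v)) + b
            for row, b in zip(weights, biases)]


def cnn_mlp_forward(image, kernels, hidden, output):
    """Convolutional feature extraction followed by a one-hidden-layer MLP."""
    features = flatten([relu2d(conv2d(image, k)) for k in kernels])
    h = [max(0.0, t) for t in dense(*hidden, features)]
    return dense(*output, h)


if __name__ == "__main__":
    rng = random.Random(0)
    image = [[rng.random() for _ in range(6)] for _ in range(6)]
    kernels = [[[rng.gauss(0, 1) for _ in range(3)] for _ in range(3)]
               for _ in range(2)]
    n_features = 2 * 4 * 4  # two 4x4 feature maps from a 6x6 image
    hidden = ([[rng.gauss(0, 0.1) for _ in range(n_features)] for _ in range(8)],
              [0.0] * 8)
    output = ([[rng.gauss(0, 0.1) for _ in range(8)] for _ in range(3)],
              [0.0] * 3)
    print(cnn_mlp_forward(image, kernels, hidden, output))  # 3 untrained outputs
```

In a trained network the kernels would respond to the spatial patterns that matter for the turbulence estimate, so the perceptron on top works with informative features rather than raw pixels.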

More information:

- Sánchez Lasheras, F., Ordóñez, C., Roca-Pardiñas, J., de Cos Juez, F. J. Real-time tomographic reconstructor based on convolutional neural networks for solar observation. Math Meth Appl Sci. 2020; 43: 8032.

- Riesgo, F. G., et al. Early Fully-Convolutional Approach to Wavefront Imaging on Solar Adaptive Optics Simulations. In: de la Cal et al. (eds), Hybrid Artificial Intelligent Systems. HAIS 2020. Lecture Notes in Computer Science, vol. 12344. Springer, 2020.